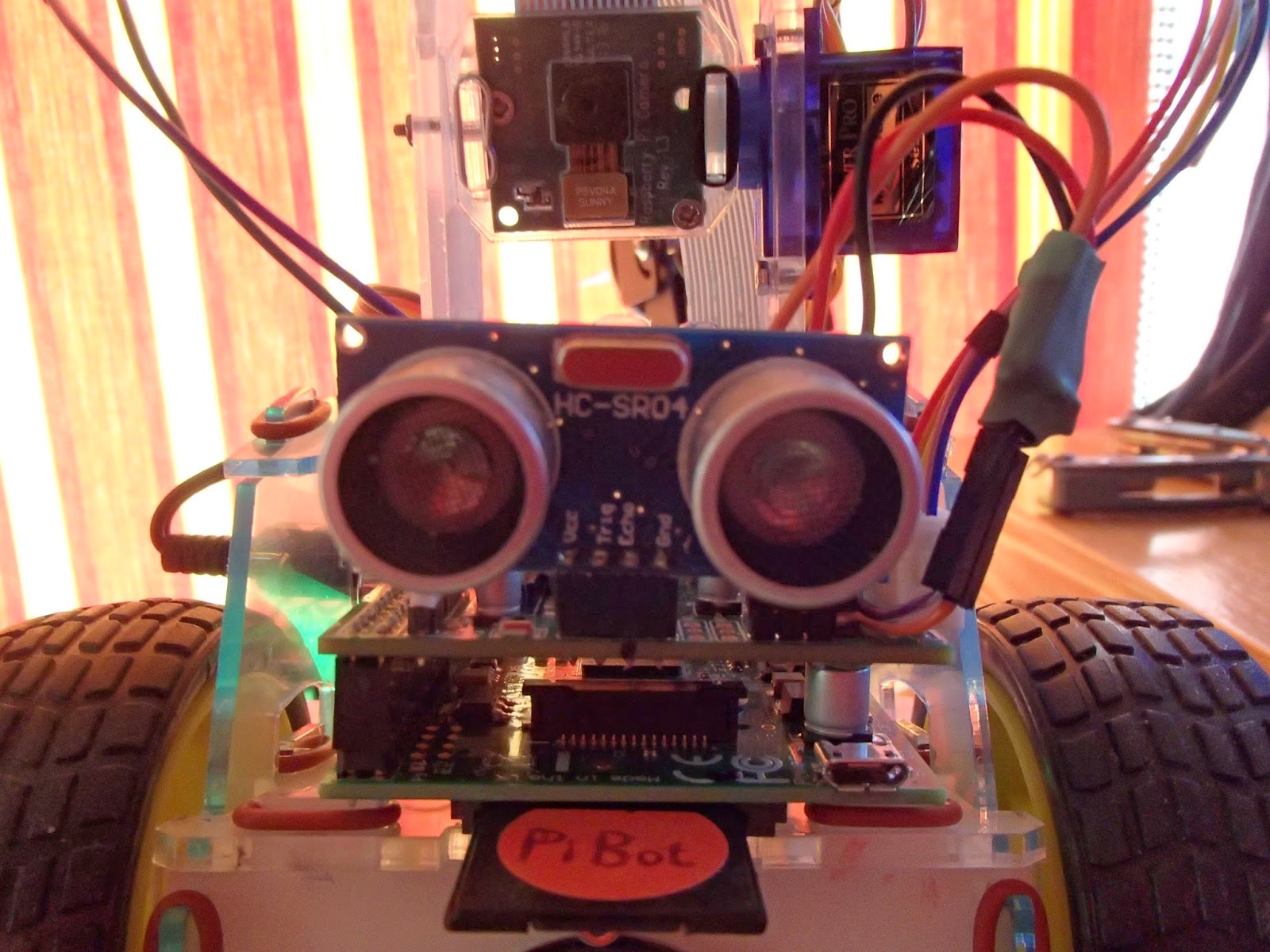

I got the camera working with the PiBot! I came across a fantastic Python library for the RasPiCam, written by Dave Hughes (or is it Davy Jones?) HERE. This is an extensive and well-documented (see HERE) library called simply picamera, and it allows RasPiCam control to be conveniently incorporated into Python programs.

To install picamera, I did the following on the Pi:

$ sudo apt-get install python3-picamera

To update the operating system when new releases of picamera (and other software) are made, use the following:

$ sudo apt-get update && sudo apt-get upgrade

and to update the Raspberry Pi's firmware, use

$ sudo rpi-update

I normally do the last two commands together at regular intervals. Incidentally, there has recently been a PiCam update, enabling more modes of use of the RasPi Cam. See HERE.

Here's the very first movie attempt:

Here's the code, which is simply a modification of the previous program, i.e. using the ultrasonic transducer to navigate without bashing into obstacles and mangling my SD card:

The picamera library is imported at line 12, the camera object is created at line 39, and line 41 sets the pixel values for the video's resolution. The camera preview is started at line 42, and it starts recording at line 43, making a video file called foo.h264. The orientation of the PiCam is such that the image is upside-down when looking forward, so the vertical and horizontal flips are required at lines 44 and 45. The camera stops recording at line 64 and is shut down at line 65. Dave's advice is always to use the picamera.PiCamera.close() method on the PiCamera object - as in line 66 - to 'clean up resources'. And that's it - the video runs for as long as the movement part of the program executes - about 45 seconds at the moment.
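The sequence of camera calls described above can be sketched on its own, separate from the navigation code. This is a minimal sketch and not the original program listing: the function name record_video, the run_movement placeholder and the 640x480 resolution are my own assumptions for illustration, and the flips are set before recording starts rather than after. The camera attributes and methods themselves (resolution, vflip, hflip, start_preview, start_recording, stop_recording, stop_preview, close) are the ones picamera provides.

```python
import time


def record_video(camera, run_movement, filename="foo.h264", resolution=(640, 480)):
    """Record video for as long as run_movement() takes to finish.

    camera is expected to behave like a picamera.PiCamera object.
    run_movement is a hypothetical stand-in for the robot's navigation loop.
    """
    try:
        camera.resolution = resolution  # (width, height) in pixels, assumed values
        camera.vflip = True             # the PiCam is mounted upside-down,
        camera.hflip = True             # so flip the image on both axes
        camera.start_preview()
        camera.start_recording(filename)
        try:
            run_movement()              # the video runs while the robot moves
        finally:
            camera.stop_recording()
            camera.stop_preview()
    finally:
        camera.close()                  # always clean up the camera resources


def main():
    import picamera  # imported here because it is only available on the Pi

    # Placeholder for the ultrasonic navigation code: just wait 45 seconds.
    record_video(picamera.PiCamera(), lambda: time.sleep(45))
```

On the Pi, calling main() should produce foo.h264 in the current directory. The try/finally blocks mean close() is still called if the movement code raises an exception part-way through.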

This then produces a video file named foo.h264, of the type that we came across in earlier blog posts. A convenient way of 'wrapping' this file as an .mp4 file is to first go to the directory where the foo.h264 file lives, i.e.

$ cd python-spi/bot-command/examples

and use the Raspberry Pi command-line tool

$ MP4Box -add filename.h264 filename.mp4

for which it's necessary to install the gpac package on the RasPi:

$ sudo apt-get install gpac
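If you'd rather do the wrapping from Python than type the command by hand, something like the following should work. It is only a sketch: the helper names mp4box_command and wrap_as_mp4 are hypothetical, and it assumes MP4Box is already installed and on the PATH. It does nothing more than run the same MP4Box -add command shown above.

```python
import subprocess
from pathlib import Path


def mp4box_command(h264_path):
    """Build the MP4Box command that wraps a raw .h264 file as an .mp4 file."""
    src = Path(h264_path)
    dst = src.with_suffix(".mp4")  # foo.h264 -> foo.mp4, same directory
    return ["MP4Box", "-add", str(src), str(dst)]


def wrap_as_mp4(h264_path):
    """Run MP4Box (from the gpac package) and return the .mp4 file name."""
    cmd = mp4box_command(h264_path)
    subprocess.run(cmd, check=True)  # raises CalledProcessError if MP4Box fails
    return cmd[-1]
```

For example, wrap_as_mp4("foo.h264") run in the examples directory would leave foo.mp4 next to the original file.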

Then, to transfer the foo.mp4 file to the PC, for playing on any one of a number of movie players or for uploading to the web, I used the WinSCP program, which I introduced in an earlier blog post (see HERE). It runs on the PC and works just like an FTP program, allowing you to drag 'n drop files from one computer to another. The foo.mp4 file is 11,322 kB in size.

Here's another video, processed with VideoPad and saved as a .avi file - with music!!:

I must try to get our cat in the picture, some time when she's not sleeping...